Hard Questions is a series from Facebook that addresses the impact of our products on society.

By Tessa Lyons, Product Manager

False news has long been a tool for economic or political gains, and we’re seeing new ways it’s taking shape online. Spammers can use it to drive clicks and yield profits. And the way it’s been used by adversaries in recent elections and amid ethnic conflicts around the world is reprehensible.

False news is bad for people and bad for Facebook. We’re making significant investments to stop it from spreading and to promote high-quality journalism and news literacy. I’m a product manager on News Feed focused on false news, and I work with teams across the company to address this problem.

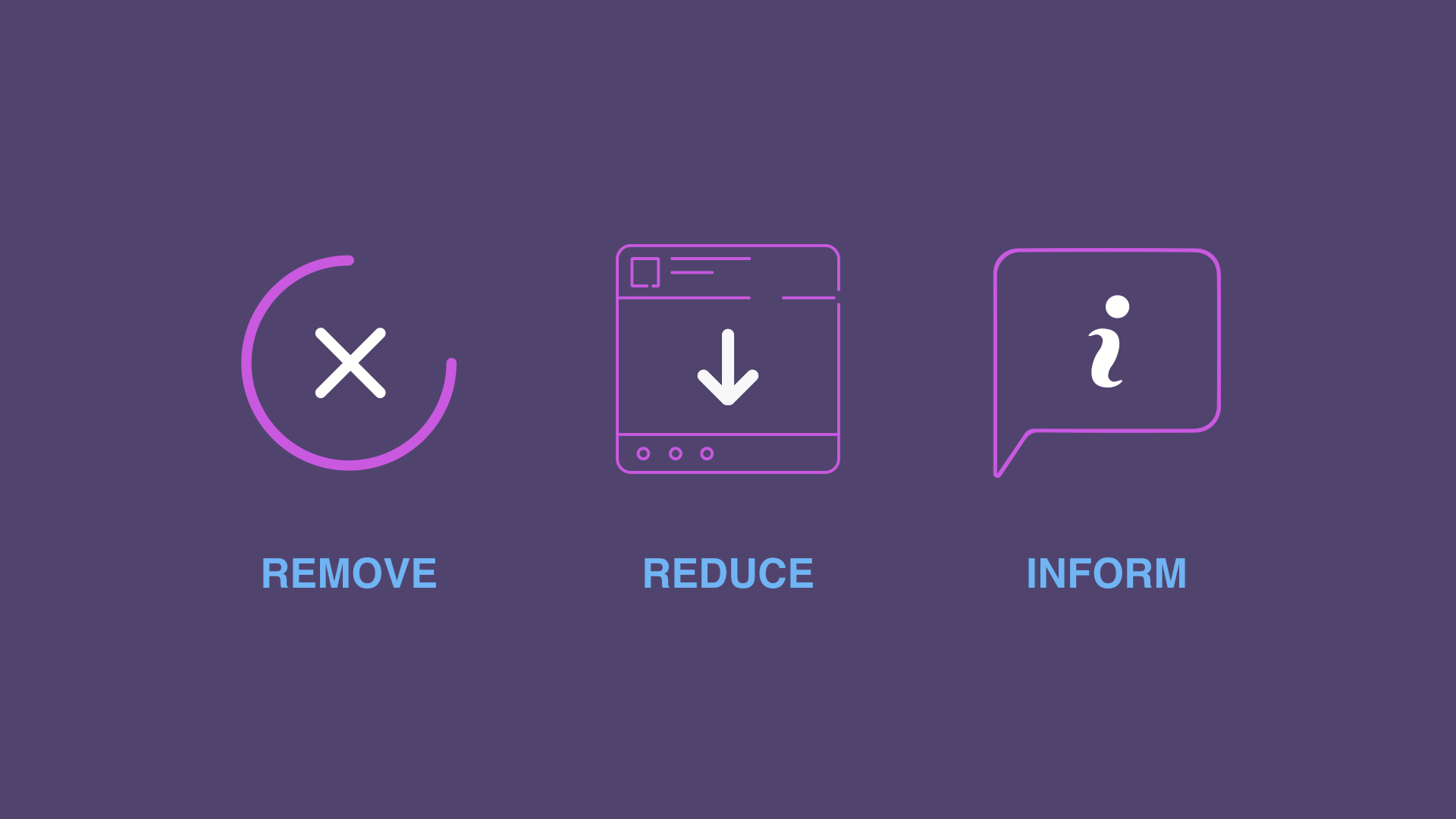

Our strategy to stop misinformation on Facebook has three parts:

- Remove accounts and content that violate our Community Standards or ad policies

- Reduce the distribution of false news and inauthentic content like clickbait

- Inform people by giving them more context on the posts they see

This approach roots out the bad actors that frequently spread fake stories. It dramatically decreases the reach of those stories. And it helps people stay informed without stifling public discourse. I’ll explain a little bit more about each part and the progress we’re making.

Removing accounts and content that violate our policies

Although false news does not violate our Community Standards, it often violates our policies in other categories, such as spam, hate speech or fake accounts, which we remove.

For example, if we find a Facebook Page pretending to be run by Americans that’s actually operating out of Macedonia, that violates our requirement that people use their real identities and not impersonate others. So we’ll take down that whole Page, immediately eliminating any posts they made that might have been false.

Over the past year we’ve learned more about how networks of bad actors work together to spread misinformation, so we created a new policy to tackle coordinated inauthentic activity. We’re also using machine learning to help our teams detect fraud and enforce our policies against spam. We now block millions of fake accounts every day when they try to register.

Reducing the spread of false news and inauthentic content

A lot of the misinformation that spreads on Facebook is financially motivated, much like email spam in the 90s. If spammers can get enough people to click on fake stories and visit their sites, they’ll make money off the ads they show. By making these scams unprofitable, we destroy their incentives to spread false news on Facebook. So we’re figuring out spammers’ common tactics and reducing the distribution of those kinds of stories in News Feed. We’ve started penalizing clickbait, links shared more frequently by spammers, and links to low-quality web pages, also known as “ad farms.”

We also take action against entire Pages and websites that repeatedly share false news, reducing their overall News Feed distribution. And since we don’t want to make money off of misinformation or help those who create it profit, these publishers are not allowed to run ads or use our monetization features like Instant Articles.

Another part of our strategy in some countries is partnering with third-party fact-checkers to review and rate the accuracy of articles and posts on Facebook. These fact-checkers are independent and certified through the non-partisan International Fact-Checking Network. When these organizations rate something as false, we rank those stories significantly lower in News Feed. On average, this cuts future views by more than 80%. We also use the information from fact-checkers to improve our technology so we can identify more potential false news faster in the future. We’re looking forward to bringing this program to more countries this year.

Informing our community with additional context

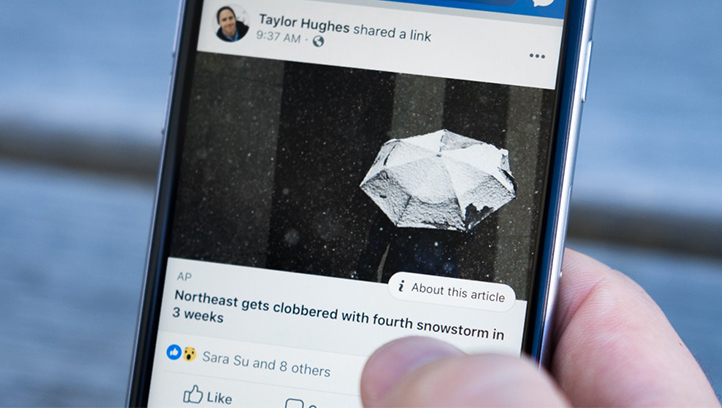

Even with these steps, we know people will still come across misleading content on Facebook and the internet more broadly. To help people make informed decisions about what to read, trust and share, we’re investing in news literacy and building products that give people more information directly in News Feed.

For example, we recently rolled out a feature to give people more information about the publishers and articles they see, such as the publisher’s Wikipedia entry. Another feature, called Related Articles, displays articles from third-party fact-checkers immediately below a story on the same topic. If a fact-checker has rated a story as false, we’ll let people who try to share the story know there’s more reporting on the subject. We’ll also notify people who previously shared the story on Facebook. Last year we created an educational tool to give people tips to identify false news and provided a founding grant for the News Integrity Initiative to invest in long-term strategies for news literacy.

Getting Ahead

As we double down on countering misinformation, our adversaries are going to keep trying to get around us. We need to stay ahead of them, and we can’t do this alone. We’re working with our AI research team, learning from academics, expanding our partnerships with third-party fact-checkers, and talking to other organizations — including other platforms — about how we can work together.

False news has disruptive and destructive consequences around the world. We have an important responsibility, and we know we have a lot of work to do to live up to it. We’ll continue to share updates on our progress and more about our approach in upcoming posts.

You can learn more about our strategy to stop false news in the short film “Facing Facts” on Inside Feed and in a talk from our F8 developer conference.